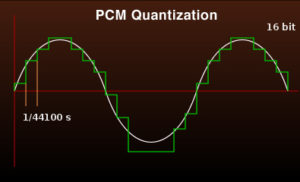

THE CD RECORDING STANDARD WAS FIRST DEFINED in the so-called “Red Book”, released in 1980 by Philips and Sony. The recording method is LPCM (Linear pulse-code modulation, often just referred to as PCM), which takes the original analogue wave signal, chops it up into slices, then registers the size of each slice as a series of numbers. Clearly, the finer the slices and the more precisely each slice is measured, the more accurate the recording will be.

But too many fine slices measured too precisely could produce data overkill. This was a particular problem back in the ’80s when the data capacity of a CD was limited to around 800MB, reduced to 700MB allowing for error correction. The number of slices is a function of how often you choose to sample the signal (the sample rate) and the Philips/Sony compromise set this as 44,100 samples per second, or 44.1kHz.

The numerical value of each slice is recorded as a binary number, a string of ones and zeros. The longer the string, the more precise the measurement. Here again, a compromise is needed. Philips/Sony settled on a string 16 bits long, technically called “16-bit quantization”.
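
The arithmetic behind that compromise is easy to check. Here is a rough sketch in Python, assuming two stereo channels and a 74-minute disc (neither figure is stated above, so treat both as assumptions); the function names are invented for illustration.

```python
SAMPLE_RATE_HZ = 44_100   # samples per second, per channel
BITS_PER_SAMPLE = 16      # 16-bit quantization
CHANNELS = 2              # assumption: stereo


def pcm_bytes_per_second(sample_rate_hz, bits_per_sample, channels):
    """Raw (uncompressed) PCM data rate in bytes per second."""
    return sample_rate_hz * bits_per_sample * channels // 8


def pcm_megabytes(minutes, sample_rate_hz=SAMPLE_RATE_HZ,
                  bits_per_sample=BITS_PER_SAMPLE, channels=CHANNELS):
    """Raw PCM size in MB (1 MB = 1,000,000 bytes) for a playing time."""
    rate = pcm_bytes_per_second(sample_rate_hz, bits_per_sample, channels)
    return rate * minutes * 60 / 1_000_000


rate = pcm_bytes_per_second(SAMPLE_RATE_HZ, BITS_PER_SAMPLE, CHANNELS)
print(rate)                      # 176400 bytes per second
print(rate * 8)                  # 1411200 bits per second
print(round(pcm_megabytes(74)))  # 783 MB of raw audio for 74 minutes
```

On those assumptions the raw audio alone comes to a little under 800MB, which is in the same ballpark as the capacity quoted above.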

Audio quantization is very like the choice of how many different levels of grey you might allow in the reproduction of a black and white photo. 16 levels of grey will give you a better sense of the light and dark areas than 8 levels. Raising that to 256 levels of grey provides a convincing imitation of an analogue monochrome photograph, but if you’re a radiologist inspecting the detail in an X-ray you’ll want at least 64,000 levels of grey.

16/44 PCM, as the CD standard is sometimes called, was chosen because the 44.1kHz sample rate accurately covers the 20Hz to 20kHz range of human hearing (a sampled signal can represent frequencies up to half its sample rate) and the 16-bit quantization records a range of amplitude that matches the normal volumes of domestic listening.
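
Both halves of that claim can be put into numbers. This is a minimal sketch: the half-the-sample-rate limit is the standard Nyquist rule, and the decibel figure uses the commonly quoted rule of thumb of about 6dB per bit, which ignores dither and noise shaping. The function names are made up for the example.

```python
import math


def nyquist_limit_hz(sample_rate_hz):
    """Highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2


def quantization_levels(bits):
    """Number of distinct values a sample of this bit depth can take."""
    return 2 ** bits


def dynamic_range_db(bits):
    """Rule-of-thumb ratio between loudest and quietest level, in dB."""
    return 20 * math.log10(2 ** bits)


print(nyquist_limit_hz(44_100))        # 22050.0, just above 20kHz
print(quantization_levels(8))          # 256, the "grey levels" of 8 bits
print(quantization_levels(16))         # 65536
print(round(dynamic_range_db(16), 1))  # 96.3
```

So 44.1kHz leaves a little room above the 20kHz limit of hearing, and 16 bits give roughly 96dB between the quietest and loudest sounds the format can describe.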

CDs and MP3s

Since 1980, video reproduction has escalated from 576-line TV, to HD and now to 4K. Audio quality, on the other hand, took a dive during the first half of this era as low bit-rate MP3s proliferated.

MP3 audio compression as a concept is brilliant. By mathematically discarding elements of the soundscape that, for a variety of reasons (including, but not limited to, pitch), the human ear won’t appreciate, it can produce an audibly very similar track that may be five to ten times smaller than the LPCM original. Towards the end of the last century, when storage was tight, this compression was very welcome. But MP3s often used very low bitrates (128kbps and below) that squeezed the life out of the music. Given sufficient headroom (192kbps and above) MP3s can shine, while still providing very useful compression.
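
To see where those shrinkage figures come from, compare an MP3 bitrate with the raw CD rate. This is only a back-of-envelope sketch, assuming stereo 16/44 LPCM as the source; `compression_ratio` is a name invented for the example.

```python
# Raw CD audio: 44,100 samples x 16 bits x 2 channels (stereo assumed)
CD_KBPS = 44_100 * 16 * 2 / 1000   # 1411.2 kbps


def compression_ratio(mp3_kbps, source_kbps=CD_KBPS):
    """How many times smaller the MP3 stream is than the source."""
    return source_kbps / mp3_kbps


for kbps in (128, 192, 320):
    print(kbps, round(compression_ratio(kbps), 3))
# 128kbps is about 11 times smaller, 192kbps about 7 times,
# and 320kbps a little over 4 times.
```

Even at the generous end of the scale an MP3 is still several times smaller than the LPCM it was made from.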

Many of the first generation CD recordings were of poor audio quality as sound engineers struggled to get to grips with the new technology. Pundits were quick to point the finger at CD’s 16/44 Red Book standard, although the blame lay elsewhere in the chain of reproduction. The science tells us that, although a necessary compromise 35 years ago, 16/44 recording appears to be finely tuned to human physiology.

FLAC

Plummeting storage costs and soaring capacities have opened up the opportunity for fresh thinking about how we record and store audio. We can say goodbye to those mushy sub-160kbps MP3s and save our CD tracks as MP3s at 320kbps. Better still, we can use FLAC (the Free Lossless Audio Codec), which can shrink LPCM to around half its size without throwing away any of the original data.

Introduced at the beginning of this century by the software engineer Josh Coalson, FLAC is a fast, very widely supported codec. It’s free of proprietary patents, very well documented and with an open source reference implementation that manufacturers and application developers are free to use without legal entanglement (unlike, for example, MP3 or Apple’s AAC).

Also unlike MP3, FLAC has no “quality settings” that trade off audio fidelity against file size. However, the same audio file can be compressed to different FLAC “levels”, resulting in smaller or larger files. The levels run from 0 (least compressed) to 8 (most compressed) and the trade-off here is simply the time taken to encode and the processing power needed to decode the audio track.
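
The idea that a compression level costs time rather than fidelity is not unique to FLAC. The sketch below uses Python’s built-in `zlib` as a stand-in, purely because it ships with the language; it is not FLAC and works quite differently inside, but it shows the same trade-off on a synthetic test tone. The tone, its length and the helper name are assumptions for illustration.

```python
import math
import struct
import zlib


def make_pcm_tone(seconds=1.0, sample_rate=44_100, freq_hz=440.0):
    """Return 16-bit mono PCM bytes for a sine tone (synthetic test data)."""
    n = int(seconds * sample_rate)
    samples = (
        int(20_000 * math.sin(2 * math.pi * freq_hz * i / sample_rate))
        for i in range(n)
    )
    return struct.pack(f"<{n}h", *samples)


pcm = make_pcm_tone()
for level in (1, 6, 9):
    packed = zlib.compress(pcm, level)
    # Whatever the level, decompressing gives back every original byte.
    assert zlib.decompress(packed) == pcm
    print(level, len(packed))
```

Whichever level you pick, what comes back out is identical to what went in. Only the file size and the effort spent getting there change.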

In the main, FLAC seems to be the first choice of audiophiles. FLAC compression using 16/44 (16-bit quantisation of 44.1kHz sampling) will preserve all the information on a CD while squishing down the file size. This isn’t “hi-res”, but the format can usefully be pushed to 24/96 if your input is faithfully recorded vinyl. As a medium, analogue vinyl has the capability of carrying more data than CD (which is not to say that in any particular instance it does), although it introduces problems of its own, particularly crosstalk, the tendency of two supposedly separate stereo channels to bleed into each other.

Hi-Res Audio

Compression of an appropriate signal at 24/96 FLAC could be considered as “entry-level hi-res”. The stuff the manufacturers want to push at you will often claim to be able to capture even more music data than that.

Hi-Res equipment and content vendors want us to throw off the shackles of that 1980s Red Book standard, slice our analogue waveforms faster and quantize them more precisely.

But does this really bring any advantage? Audio engineer and founder of the boutique manufacturing company Mojo Audio, Benjamin Zwickel, doesn’t think so. Zwickel seems to be talking in terms of living room amplification through loudspeakers. It’s feasible that different principles may apply to delicately tuned balanced armature transducers (like the Nuforce HEM4s) playing directly into your ear.

However, even that proposition is brought into question by Monty (monty@xiph.org). His closely argued critique of hi-res (withdrawn from the Web but still happily available through the Wayback Machine) is worth a read in its entirety. I was particularly struck by his argument that while “golden ears” probably do exist among the general population, the talent has nothing to do with the ability to hear outside the normal range of human audible frequencies.

It’s certainly right to view studio recording in a completely different light. There’s every reason to make sure master recordings capture all possible nuances of the music, including ultrasonic frequencies which may or may not have an effect on human hearing. The science behind this is still in the exploratory stages.

DSD

In the 1990s Philips and Sony were looking for a way to get this expanded studio sound out to consumers (and, dare we say, persuade CD customers to buy their collections all over again in a sparkling new format).

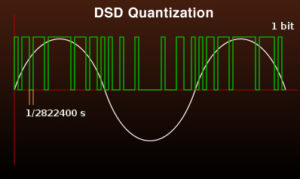

What they came up with was a radically new delivery system called Super Audio CD (SACD). This used a recording method called Direct Stream Digital that was entirely different from LPCM.

It takes samples 64 times faster than CD but the quantisation of each sample is only one bit. A CD sample can store 2^16 (or 65,536) distinct values. A DSD sample has a range of only two possible values, 0 or 1.
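
Put into numbers, the trade looks like this. It is a minimal sketch of the per-channel arithmetic only; the constant and function names are assumptions made for the example.

```python
CD_SAMPLE_RATE_HZ = 44_100
CD_BITS_PER_SAMPLE = 16


def dsd_sample_rate_hz(multiple=64):
    """DSD sample rate as a multiple of the CD sample rate."""
    return multiple * CD_SAMPLE_RATE_HZ


def bits_per_second(sample_rate_hz, bits_per_sample):
    """Raw bits per second for one channel."""
    return sample_rate_hz * bits_per_sample


dsd_rate = dsd_sample_rate_hz()
cd_bits = bits_per_second(CD_SAMPLE_RATE_HZ, CD_BITS_PER_SAMPLE)
dsd_bits = bits_per_second(dsd_rate, 1)

print(dsd_rate)                 # 2822400 samples per second
print(2 ** CD_BITS_PER_SAMPLE)  # 65536 values per CD sample
print(cd_bits)                  # 705600 bits per second per channel
print(dsd_bits)                 # 2822400 bits per second per channel
print(dsd_bits / cd_bits)       # 4.0
```

So although each DSD sample is a single bit, the stream as a whole carries four times as many raw bits per channel as CD.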

DSD and its variants (DSD128, DSD256 and DSD512—in each case the terminating digits indicate how much faster the sampling rate is compared with the CD standard) raise the question: How can you quantize the size of a sample in a single bit?

Good question. You don’t.

It’s the entire stream of single bits that carries the information. A burst of ones will mean the value is ascending; a burst of zeros will mean it’s falling. If the stream alternates between zeros and ones the value is remaining flat.

One way of looking at the difference between CD’s PCM sampling and DSD is to think of the string of CD samples as schoolchildren queuing up to have their height measured. Matron uses a six foot rule, and carefully measures each child to the nearest 8th of an inch.

That was last year. This year the school has suddenly expanded, with 64 times the number of pupils. The new DSD matron gets through them all a lot quicker now by simply making a note whether each child is taller than, shorter than or the same height as the previous child in the queue.

This analogy, as a moment’s thought will tell you, fails completely for a string of random values like this. The technique, called delta-sigma processing, assumes there will be some sort of relationship between the consecutive values in the stream. The sort of relationship you would find with samples extracted in rapid succession from a continuous waveform. In this case, delta-sigma processing can produce a very accurate digital representation of the original analogue signal.
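
The queue of schoolchildren can be sketched in a few lines of Python. What follows is plain delta modulation, the simplest relative of delta-sigma processing, and not the modulator a real DSD encoder uses (those add integration and noise shaping on top). The step size, the test tone and the function names are all assumptions made for illustration.

```python
import math

STEP = 0.05  # hypothetical step size: how far one bit moves the estimate


def delta_encode(samples, step=STEP):
    """One bit per sample: 1 if the signal is above the running estimate."""
    estimate = 0.0
    bits = []
    for x in samples:
        bit = 1 if x > estimate else 0
        estimate += step if bit else -step
        bits.append(bit)
    return bits


def delta_decode(bits, step=STEP):
    """Rebuild the waveform by stepping up on 1 and down on 0."""
    estimate = 0.0
    out = []
    for bit in bits:
        estimate += step if bit else -step
        out.append(estimate)
    return out


print(delta_encode([0.0] * 6))            # [0, 1, 0, 1, 0, 1] (flat)
print(delta_encode([0.1, 0.2, 0.3, 0.4])) # [1, 1, 1, 1] (rising)

# A slow sine wave, heavily oversampled: 256 samples per cycle.
tone = [0.8 * math.sin(2 * math.pi * i / 256) for i in range(512)]
rebuilt = delta_decode(delta_encode(tone))
print(max(abs(a - b) for a, b in zip(tone, rebuilt)))  # worst-case error
```

Because the tone changes by less than one step between neighbouring samples, the rebuilt wave stays within about one step of the original. Feed it random values instead and the estimate can no longer keep up, which is exactly where the analogy breaks down.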

How accurate? More accurate than, say, 24/96 FLAC? Again, Benjamin Zwickel of Mojo Audio (from whom I’ve borrowed the PCM versus DSD charts above) steps in with a note of scepticism.

Introduced con brio at the end of the last century as the future of digital audio disks, SACD is now largely abandoned. DSD lives on as a niche format for music downloads.

DXD (Digital eXtreme Definition, a very high-rate PCM format developed for editing DSD material in the studio) sometimes escapes into distribution as the very highest quality transfer format. Because its files are so large, in distribution it will usually be compressed losslessly using FLAC. Some sources are now touting this as “the future of digital audio”.

Conclusion

For many music lovers the focus is moving away from owning a collection. Subscription services are the trend. My Yamaha RX-V679 offers me Napster, Spotify and Juke over the Internet, but I much prefer to tap into my own music stored locally on my NAS devices.

If you’re like me, here’s my personal view: stick to FLAC. At 16/44 FLAC will faithfully reproduce your CD collection. If you’re digitising vinyl there’s probably a good case for FLACcing at 24/96.

If you own, or aspire one day to own, a $75,000 speaker system backed by equivalent amplifiers and renderers to a total of, say, $200,000, you’ll probably think nothing of buying your entire music collection all over again as DSD or whatever the HiDef standard du jour might be (MQA emerged recently, and I’ll write more about it here if it turns out to have legs).

But if that’s you, you’re not like me.

Chris Bidmead: 7 Dec 2016