Our data sheet covering the established audio formats, nearly two years old at the time of writing, is here. MQA gets a last-minute mention there. In the interim, though, the technology has taken a slightly steadier hold on the market through its adoption by the streaming service Tidal, with promises of similar services to follow.

The future of MQA is still up for grabs (as, indeed, seems to be the future of Tidal). For this reason, we’re writing it up as a separate supplement, rather than editing it into the original.

What and Why is MQA

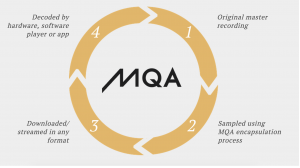

THE INITIALS MQA STAND FOR Master Quality Authenticated. This proprietary method of compressing a hi-res audio stream or file to minimise bandwidth and/or storage requirements was devised by the Meridian Group here in the UK and first demonstrated publicly at the Consumer Electronics Show in Las Vegas in 2015.

It’s based on the general hi-res assumption that the Red Book standards devised for the introduction of CDs back in the early ’80s are no longer fit for purpose, at least as far as true hi-fi evangelists are concerned. CDs capture frequencies up to 20kHz, slicing the incoming audio signal into separate samples 44,100 times a second and recording the value of each slice as a 16-bit number. This process effectively filters the original signal, and the arithmetic captures only an approximation of the waveforms entering the system. The idea behind the Red Book standards was that this approximation would be indistinguishable to the human ear from the real thing.
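
To make the slicing and rounding concrete, here is a minimal Python sketch. It assumes a hypothetical 1 kHz test tone at 90% of full scale (our choice, not anything in the Red Book), samples it 44,100 times a second, rounds each sample to a 16-bit integer and measures how far the stored numbers drift from the true waveform.

```python
import math

SAMPLE_RATE = 44_100                    # Red Book: samples per second
BIT_DEPTH = 16                          # Red Book: bits per sample
FULL_SCALE = 2 ** (BIT_DEPTH - 1) - 1   # largest positive 16-bit value (32767)

def sample_and_quantise(freq_hz, n_samples, amplitude=0.9):
    """Slice a sine wave into samples and round each one to a 16-bit integer.

    Returns (exact values, stored integers), both expressed in 16-bit units.
    """
    exact, stored = [], []
    for n in range(n_samples):
        t = n / SAMPLE_RATE                               # time of the n-th slice
        value = amplitude * FULL_SCALE * math.sin(2 * math.pi * freq_hz * t)
        exact.append(value)
        stored.append(round(value))                       # only an integer survives
    return exact, stored

# One second of a hypothetical 1 kHz test tone at 90% of full scale.
exact, stored = sample_and_quantise(1000, SAMPLE_RATE)
errors = [s - e for s, e in zip(stored, exact)]

# Rounding to the nearest integer is never out by more than half a step (0.5 LSB).
max_error = max(abs(e) for e in errors)

# Signal-to-quantisation-noise ratio measured from the actual errors.
signal_power = sum(v * v for v in exact) / len(exact)
noise_power = sum(e * e for e in errors) / len(errors)
snr_db = 10 * math.log10(signal_power / noise_power)

# Textbook figure for a full-scale sine wave: about 6.02 dB per bit plus 1.76 dB.
theory_db = 6.02 * BIT_DEPTH + 1.76

print(f"worst error {max_error:.3f} LSB, measured SNR {snr_db:.1f} dB, "
      f"full-scale theory {theory_db:.2f} dB")
```

The measured signal-to-noise ratio lands a little below the textbook figure of about 98 dB, mainly because the test tone stops short of full scale. Either way, every stored value sits within half a step of the real one, which is the sense in which the CD holds an approximation.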

If you revisit the Red Book parameters, speeding up the sample rate and allocating more data bits to storing the value of each sample (increasing the bit depth, which makes the quantisation finer), you can reach beyond the 20kHz traditionally believed to be the upper limit of human hearing and capture those numinous overtones said by certain audiophiles to add “presence”. You also get generally cleaner waveforms, with fewer artefacts like pre-echoes that can appear when the digital data is converted back to analogue.
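
The cost of going beyond the Red Book is easy to put in numbers. This sketch assumes uncompressed stereo PCM and picks 96/24 and 192/24 as representative hi-res formats (our choice of examples). It shows the Nyquist limit, half the sample rate, which is the highest frequency each format can represent, and the raw data rate, which is where the larger file sizes come from.

```python
# Illustrative formats: (label, sample rate in Hz, bits per sample)
FORMATS = [
    ("Red Book CD", 44_100, 16),
    ("Hi-res 96/24", 96_000, 24),
    ("Hi-res 192/24", 192_000, 24),
]
CHANNELS = 2  # stereo

def nyquist_hz(sample_rate):
    """Highest frequency a sample rate can represent: half the rate."""
    return sample_rate / 2

def pcm_bitrate(sample_rate, bits, channels=CHANNELS):
    """Uncompressed PCM data rate in bits per second."""
    return sample_rate * bits * channels

def megabytes_per_minute(sample_rate, bits, channels=CHANNELS):
    """Storage needed for one minute of uncompressed audio, in megabytes (10**6 bytes)."""
    return pcm_bitrate(sample_rate, bits, channels) * 60 / 8 / 1_000_000

for label, rate, bits in FORMATS:
    print(f"{label:14} ceiling {nyquist_hz(rate) / 1000:6.2f} kHz, "
          f"{pcm_bitrate(rate, bits) / 1e6:6.3f} Mb/s, "
          f"{megabytes_per_minute(rate, bits):5.1f} MB per minute")
```

The Nyquist limit for CD is 22.05kHz; the practical 20kHz ceiling leaves room for the anti-aliasing filter to roll off. A 192/24 stream carries about six and a half times the data of a CD.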

MQA’s solution to the problem of the larger file sizes this creates is—as you might expect—to employ compression. This isn’t the psycho-acoustic compression of MP3 or even the lossless, purely mathematical compression of FLAC. It’s somewhere between the two.

Scores from the Asphalt 8 racing game. We can compress the size of the table if we sacrifice some of the least significant digits of the timings.

One way of explaining MQA’s compression is to think of a car racing game that keeps a record of the times of the top four winners. You’ll notice from the picture that the times listed on the right-hand side of the table include the seconds to three places of decimals (the colon here is acting as a decimal point). In this case, these three “least significant digits” can safely be ignored for the purpose of deciding the rankings.

But as we’ve got space for these redundant numerals, why not subvert them to carry other data about the race? Provided there are never more than 99 participants in these races, we could compile a separate list of drivers, give each a number and then use that number in place of the two least significant digits, preserving the digit immediately following the decimal point as a time value just in case. We could then remove the whole Player column, compressing the table without losing any information.
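
The racing-table trick can be written out in a few lines of Python. This is purely an illustration of the analogy: the driver names and times are made up, times are held as whole milliseconds, and players are numbered 1 to 99 so their number fits in the two digits being overwritten.

```python
def fold(time_ms, player):
    """Overwrite the two least significant digits of a time (in milliseconds)
    with a player number from 1 to 99, keeping the time to a tenth of a second."""
    if not 1 <= player <= 99:
        raise ValueError("player number must fit in two decimal digits (1-99)")
    return (time_ms // 100) * 100 + player

def unfold(entry):
    """Split a folded entry back into (time truncated to a tenth, player number)."""
    return (entry // 100) * 100, entry % 100

# Hypothetical roster and finishing times; player number = position in the list + 1.
drivers = ["Ava", "Ben", "Cal", "Dee"]
times_ms = [83_456, 84_912, 85_037, 86_201]

table = [fold(t, number) for number, t in enumerate(times_ms, start=1)]
print(table)                                  # [83401, 84902, 85003, 86204]

# Sorting the single column still ranks the race, and each entry names its driver.
for entry in sorted(table):
    time_ms, number = unfold(entry)
    print(f"{drivers[number - 1]:4} {time_ms / 1000:.1f} s")
```

Note what has been lost: two drivers finishing within the same tenth of a second would now be ranked by player number rather than by time.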

No, you’re right—we are trashing some information in the process. We’re compromising quantisation of the timings, but who’s going to notice?

So the ultrasonic data is smuggled into the least significant bits of the audible-band signal. MQA evangelists have added a touch of marketing magic by naming this procedure after the Japanese art of paper folding. They call it “the Origami process”.

Playback now has two different ways of turning these data back into analogue audio. It can either say, “Here’s a regular CD track, I’ll just play it.” Or it can say, “Ah-ha, this is that MQA stuff with extra hi-def information encoded. Let’s strip out those least significant bits, decode them, and stitch the original signal back together again.”
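
The same idea works in binary, which is closer to what happens to audio samples. The sketch below is a toy model, not MQA’s actual encoding, which is proprietary: it assumes a hypothetical scheme in which the lowest three bits of each 16-bit sample carry folded data, and it shows both playback paths.

```python
HIDDEN_BITS = 3                       # hypothetical: number of low bits carrying folded data
MASK = (1 << HIDDEN_BITS) - 1         # 0b111

def embed(samples, payload):
    """Overwrite the lowest HIDDEN_BITS of each 16-bit sample with a payload chunk."""
    return [(s & ~MASK) | (p & MASK) for s, p in zip(samples, payload)]

def legacy_play(samples):
    """Path 1: an ordinary CD player treats every bit, hidden data included, as audio."""
    return list(samples)

def aware_decode(samples):
    """Path 2: a folding-aware decoder splits each sample into audio and hidden data."""
    audio = [s & ~MASK for s in samples]
    hidden = [s & MASK for s in samples]
    return audio, hidden

original = [1000, -5, 32767, -32768]   # hypothetical 16-bit samples
payload = [5, 3, 0, 7]                 # hypothetical 3-bit chunks of "ultrasonic" data

folded = embed(original, payload)      # [1005, -5, 32760, -32761]
audio, hidden = aware_decode(folded)   # hidden == [5, 3, 0, 7]

# The legacy path is off by at most MASK (7) steps per sample: low-level noise.
worst = max(abs(a - b) for a, b in zip(legacy_play(folded), original))
print(folded, hidden, worst)
```

The ordinary player never knows anything is hidden; it simply hears each sample nudged by up to seven steps. The aware decoder recovers the hidden chunks exactly, while the audio it keeps has lost those three bits of resolution.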

The stripping and decoding part of this is processor-intensive. The restitching (MQA calls it “rendering”) is the easy part of the job. It’s a game of two halves, and knowing this is important to understanding the workings of MQA hardware devices like the iFi nano iDSD Black Label DAC.

A key selling point here is MQA’s compatibility with regular CDs. Or, if the signal is being streamed, its ability to be unwrapped from a lossless container such as FLAC into the CD equivalent with no special decoding.

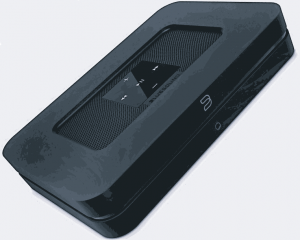

An Internet music streamer that will do both MQA decoding and rendering, the Bluesound Node 2 sells for £500.

When an ordinary player treats those hidden bits as audio, they add a little noise. This raised noise floor is regarded as acceptable, the assumption being that if you’re not into hi-res you won’t mind a bit of low-level noise. You may be listening in a noisy environment; your less than sterling audio equipment will probably be introducing noise of its own; and if you do happen to be paying enough attention to sense the noise, it will probably disappear when you turn down the volume a jot.
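
How much noise? Here is a rough sketch, assuming (hypothetically) that the folded data looks like random bits to an ordinary player and occupies the lowest k bits of each 16-bit sample. We don’t claim these are the figures MQA actually uses; the point is how the noise floor scales with the number of bits given over.

```python
import math
import random

SAMPLE_RATE = 44_100
FULL_SCALE = 32_767
rng = random.Random(1)      # fixed seed so the results are repeatable

# One second of a 1 kHz tone at 90% of full scale, stored as 16-bit integers.
exact = [0.9 * FULL_SCALE * math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE)
         for n in range(SAMPLE_RATE)]
pcm = [round(v) for v in exact]

def snr_with_hidden_bits(k):
    """SNR (dB) heard by an ordinary player when the lowest k bits of every
    sample hold random-looking data instead of audio."""
    mask = (1 << k) - 1
    played = [((s & ~mask) | rng.getrandbits(k)) if k else s for s in pcm]
    noise = [p - e for p, e in zip(played, exact)]
    signal_power = sum(e * e for e in exact) / len(exact)
    noise_power = sum(x * x for x in noise) / len(noise)
    return 10 * math.log10(signal_power / noise_power)

results = {k: snr_with_hidden_bits(k) for k in range(5)}
for k, snr in results.items():
    print(f"{k} hidden bit(s): SNR {snr:.1f} dB")
```

The SNR falls by several dB for every extra bit handed over, close to the familiar rule of thumb of about 6 dB per bit.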

So this is MQA’s “CD-compatibility”. The real MQA aficionados are promised something a lot better.

At the top end, you’ll have a dedicated MQA decoder/renderer like the Bluesound Node 2 that has the whole MQA schmeer built into it. At the cheaper end of the market, devices like the iFi nano iDSD Black Label DAC will expect to receive the data already decoded, typically by software running on a computer or a phone. Devices in this category are called MQA renderers because they only do the stitching together part before turning the signal from digital into analogue.

Foot Surgery

If you’ve been following this closely, you’ll have spotted a possible Achilles’ heel of MQA—the fact that those random least significant bits in the lower octaves are still there, compromising the quantisation and introducing noise.

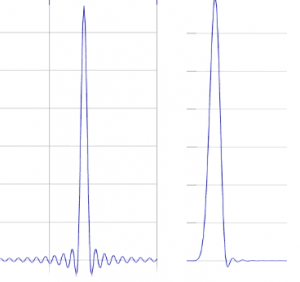

A transient before and after apodisation

The official MQA argument seems to be that within the widened scope of the now much-improved audio fidelity these errors will have little or no impact on human hearing. And while MQA is employing elaborate maths to unwrap those higher frequencies, it’s also cleaning up some of the infelicities that Red Book-style processing tends to introduce: in particular, the small pre- and post-echoes that creep in around transients, the brief peaks of energy in the signal.

When a plectrum hits a guitar string it produces a hard sounding momentary “attack” followed by a much smoother sound that slowly dies away, the “sustain”. The attack is a typical transient.

The translation from analogue to digital and back to analogue that’s inherent in all modern audio recording and transmission technologies tends to introduce these anomalies that appear in graphical representations as spurious small “feet” on either side of the transient. The trailing foot will seldom be much of a problem—for one thing, it’s masked by the sustain that usually follows, and if there’s no sustain (as with a heavily damped cymbal, for example), the ear may well accept it as a natural-sounding ambient echo.
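
One common source of these feet is the steep low-pass filtering that conversion requires just below the Nyquist limit. The sketch below assumes a plain linear-phase filter built from a truncated sinc function (a textbook simplification, not any particular converter’s design; the cutoff and length are illustrative) and passes a single-sample click through it.

```python
import math

def sinc_lowpass(cutoff, taps):
    """Linear-phase low-pass FIR: a truncated ideal (sinc) impulse response.

    cutoff is a fraction of the sample rate (0.5 would be the Nyquist limit).
    """
    mid = (taps - 1) // 2
    coeffs = []
    for n in range(taps):
        x = n - mid
        if x == 0:
            coeffs.append(2 * cutoff)
        else:
            coeffs.append(math.sin(2 * math.pi * cutoff * x) / (math.pi * x))
    return coeffs

def convolve(signal, kernel):
    """Direct convolution: every input sample launches a scaled copy of the kernel."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out

click = [0.0] * 40 + [1.0] + [0.0] * 40     # silence, one full-scale click, silence
kernel = sinc_lowpass(0.45, 31)             # about 19.8kHz at 44.1kHz; illustrative values
y = convolve(click, kernel)

peak = max(range(len(y)), key=lambda i: abs(y[i]))
pre_echo = max(abs(v) for v in y[:peak - 1])    # ripple arriving before the click
post_echo = max(abs(v) for v in y[peak + 2:])   # ripple trailing after it
print(f"peak {y[peak]:.3f} at sample {peak}; feet {pre_echo:.3f} before, {post_echo:.3f} after")
```

The output rings on both sides of the click, and because the filter is symmetric the two feet are exact mirror images: the ripple before the peak, arriving ahead of the event that caused it, is the pre-echo.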

The leading foot, the pre-echo, is a much more obtrusive artefact. Part of MQA’s claim to fame is the ability to remove both kinds of feet from transients using a process called “apodisation”. If you know your ancient Greek you’ll recognise this as meaning “foot removal”.
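
In general signal-processing usage, apodisation means tapering a filter’s impulse response with a window so that its ringing dies away sooner. The sketch below applies a Hann window, our choice, to the same kind of truncated-sinc filter and compares the energy left in the feet. MQA’s own filters are proprietary, and this is not a description of them.

```python
import math

def sinc_lowpass(cutoff, taps, window=None):
    """Linear-phase low-pass FIR (truncated sinc), optionally tapered by a window.

    cutoff is a fraction of the sample rate; taps should be odd so there is a centre tap.
    """
    mid = (taps - 1) // 2
    coeffs = []
    for n in range(taps):
        x = n - mid
        h = 2 * cutoff if x == 0 else math.sin(2 * math.pi * cutoff * x) / (math.pi * x)
        if window is not None:
            h *= window(x, mid)          # scale each tap by the taper
        coeffs.append(h)
    return coeffs

def hann(x, half_width):
    """Hann taper: 1 at the centre, falling smoothly to 0 at +/- half_width."""
    return 0.5 * (1 + math.cos(math.pi * x / half_width))

def foot_energy(coeffs, guard=8):
    """Energy in the 'feet': every tap at least `guard` samples from the centre."""
    mid = (len(coeffs) - 1) // 2
    return sum(c * c for n, c in enumerate(coeffs) if abs(n - mid) >= guard)

plain = sinc_lowpass(0.45, 63)                   # illustrative cutoff and length
apodised = sinc_lowpass(0.45, 63, window=hann)

ratio_db = 10 * math.log10(foot_energy(apodised) / foot_energy(plain))
print(f"feet energy: plain {foot_energy(plain):.5f}, "
      f"apodised {foot_energy(apodised):.5f} ({ratio_db:.1f} dB)")
```

The windowed version keeps the central peak but shrinks and shortens the feet. It doesn’t abolish the pre-echo, since a symmetric filter still rings before the peak as much as after it, and the price is a gentler roll-off around the cutoff. Removing the leading foot altogether calls for a different, asymmetric (for example minimum-phase) design.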

DAC Matching

MQA claims to do one more important clean-up. Every DAC (like every item of electronics ever made, including even the odd bit of wiring) imposes its own characteristics on the signal it’s handling. Because the organisation behind MQA certifies all equipment using its logo, it is able to define the list of qualifying DACs and, in principle, to take each one’s characteristics into account.

Apodisation and other filtering take place in the rendering section of the MQA playback process. So whether the decoding and rendering are both built into the MQA hardware (as with the Bluesound Node 2) or divided between hardware and software (which is how portable devices like the iFi nano iDSD Black Label DAC are likely to be designed), it would seem that the MQA organisation should always be able to ensure that the filtering gives the best possible match to the particular DAC handling the output stage.

However, the MQA literature seems to suggest that the closest DAC matching only applies to integrated devices that handle both the decoding and rendering. If I understand the available specs correctly (please feel free to comment if you think I’m wrong), with render-only devices like the iFi nano iDSD Black Label DAC the DAC-matching is less precise.

Either way, the result, according to MQA evangelists, is an output signal certified to be the closest possible match to what was laid down in the studio. An indicator lamp on the MQA output device lights up with a particular dedicated colour to certify this. And that’s the Authentication in Master Quality Authenticated.

Ah, But…

Audiophiles are divided on the value of, and even the raison d’être for, MQA. Reports based purely on listening sessions come under some suspicion, particularly when they claim that in A-B tests against the studio’s original recordings MQA’s output sounds even better! The rationale is that MQA’s final, pre-DAC filter clean-up actually improves the sound.

Qualified sound technicians have pointed out that the introduction of errors can indeed give the subjective impression that the music is somehow “better”. Consider the post-echo we discussed under apodisation. There’s no question that this is an error, yet by somewhat brightening the acoustic it might well be judged by some listeners as an improvement. And a filter that happened to emphasise and extend post-echoes like these might sound even better to some ears.

Other assessors of MQA have taken a more objective approach: examining the theory of MQA (as far as it is known—I understand much of the key technology is under proprietary wraps) and performing technical analysis of the waveforms MQA produces. One such detailed analysis is from a contributor to Computer Audiophile.

1997 marked the introduction by IBM of the first hard drive to use giant magnetoresistive (GMR) heads, which promised a huge increase in drive capacity. IBM’s 3.5-inch GMR Titan disk drive was just over 16.8GB. The first terabyte drive didn’t appear until 2007. Today’s drives can pack almost 1000 times the Titan’s capacity into the same physical form factor.

While Netflix regularly streams reasonably decent 4K video content to its customers at around 15Mb/sec for $12 per month, the Tidal high quality audio streaming service at $20 per month currently only needs a tiny fraction of this bandwidth, less than a tenth, in fact.

In response, Chrislu argued that not every country is as lucky as ours with its Internet bandwidth, and that compression will always have value somewhere in the world. His views certainly command respect. He holds a BSc in Electrical Engineering and was, for the best part of the ’90s, Frank Zappa’s in-house sound engineer. But there’s an uncomplicated rebuttal to his argument here: a country unable to supply bandwidth for Internet video streaming is unlikely to be a market for streamed hi-res audio in the first place, at least at the sort of monthly prices Tidal is charging.
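
The bandwidth comparison is easy to check. This sketch assumes uncompressed stereo PCM (a lossless codec such as FLAC would shrink these figures further) and takes the 15Mb/s 4K video figure quoted above.

```python
NETFLIX_4K_MBPS = 15.0      # video figure quoted in the text

def pcm_mbps(sample_rate, bits, channels=2):
    """Uncompressed PCM data rate in megabits per second."""
    return sample_rate * bits * channels / 1_000_000

cd = pcm_mbps(44_100, 16)          # Red Book quality
hires = pcm_mbps(192_000, 24)      # a common hi-res rate, used here for comparison

for label, rate in [("CD 44.1/16", cd), ("Hi-res 192/24", hires)]:
    print(f"{label:14} {rate:.3f} Mb/s = {rate / NETFLIX_4K_MBPS:.1%} of a 4K video stream")
```

Even uncompressed 192/24 audio fits comfortably inside the bandwidth of a single 4K video stream.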

MQA Meets CD

On March 17 of last year, a Japanese label called Ottava released the world’s first MQA compact disc, A. Piazzolla by Strings and Oboe. The label’s CEO, Mick Sawaguchi, had first encountered MQA the previous year, when Bob Stuart, founder and chief engineer of Meridian Audio, gave a presentation to the Japan Audio Society.

“This Ottava MQA CD starts from a 176/24 master,” Bob Stuart explains. “The Origami process is used to fold the audio into a 44.1kHz file which can be post-processed to provide a 16-bit MQA file.”

So MQA is being used to compress music to fit hi-res audio onto a CD. Why, for heaven’s sake? You could get as much capacity as you’d ever need for hi-res audio onto the same physical size of disc by using Blu-ray.
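
A rough capacity check, assuming uncompressed stereo PCM at 176.4kHz/24-bit (the usual meaning of “176/24”), a standard 74-minute Red Book CD and a 25GB single-layer Blu-ray:

```python
CD_AUDIO_BYTES = 44_100 * 2 * 2 * 74 * 60   # 74 minutes of 16-bit stereo Red Book audio
BLU_RAY_BYTES = 25 * 10**9                  # single-layer Blu-ray, 25GB

def bytes_per_second(sample_rate, bits, channels=2):
    """Uncompressed PCM data rate in bytes per second."""
    return sample_rate * bits * channels // 8

hires_rate = bytes_per_second(176_400, 24)  # assumed meaning of the "176/24" master

cd_minutes = CD_AUDIO_BYTES / hires_rate / 60
bd_hours = BLU_RAY_BYTES / hires_rate / 3600
print(f"176.4/24 stereo: {cd_minutes:.1f} min per CD, {bd_hours:.1f} h per Blu-ray")
```

Stored raw, the hi-res master would fill a CD in about twelve minutes, whereas a single-layer Blu-ray holds more than six hours of it. That gap is what the Origami folding is working around.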

Are you saving money? CDs are cheaper than Blu-rays, true. And your Piazzolla disc will certainly play on your regular CD player. Should you want to listen to it in its full MQA glory, you might want to check out Meridian’s 808v6 Reference CD Player. The official price is £11,000. But the good news is that, at the time of writing, it’s on offer from Audio Affair in their Warehouse Clearance for just £9,995.00.

Conclusion

MP3 was a miraculous way of compressing music files back in the late ’90s when we really needed it. Over the years, lossy compression has become less and less necessary. At the same time, the scalability of the format means that if you have more bandwidth/storage to spare you can still use MP3 at, say, 256kbps or above to store or stream very respectable music quality.

The big snag with MP3, as with any lossy compression format, is that any subsequent lossy conversion will result in a further loss of quality. It’s no way for a studio to store master tracks. And at home, it’s no way to store tracks you might hope later to convert to a more modern format.

So if you’re currently crunching your CD or vinyl collection to MP3s before stacking them in the attic—STOP! A lossless format like FLAC is what you should be using.

What has this got to do with MQA? It’s a reminder that in many ways MQA is the new MP3. More musically competent, certainly. But also more complicated, with more proprietary secrets and with no open source equivalent like LAME tracking it in the free software community. And also, like MP3, lossy.

Chris Bidmead: 4 Aug 2018