Rereading those notes on seeking help I wrote, gosh, six years ago, I realise how much things have changed. The advent of AI has added a whole new tier to the help desk: an automated FAQ that discusses your problem in a reasonably human mode. In many cases this is an improvement on the old low-wage Tier 1 hired help working from a script. But I’d no longer recommend a company’s help desk as your first port of call.

And I’m starting to feel much the same about forums. They’re a mixed bunch and you need to approach them critically. If you find a responsive one relating to your issue, where well-shaped, intelligent questions are answered on point, you’re lucky. Sign up; stick with it. The big mistake is forum tourism: you plug your error message into Google search, get a string of hits from a dozen forums and find yourself burrowing through a warren of rabbit-holes.

How so? What happens is this: your “problem” is something you think of as “out there in the world”. But if you’ve read the Tested Technology piece on “Keeping the Kit Happy”, you’ll understand that there are very likely two main components to any problem. There’s the what’s-not-working-out-there component, but there’s also the what-I-don’t-understand component in your own head: the internal WIDU component.

You’re human. And so are the folks discussing your problem in the forums. The chances are high that those who’ve run into the same external component of the problem have much the same WIDU as you.

This pretty much guarantees that any problem you hit will be echoed across the Internet by solution seekers who all have a similar WIDU mind-set. Which is how the “this product is complete rubbish” wildfire so easily rages over the World Wide Web.

You’d be right to stop me at this point to remind me that this WIDU has been the engine of modern science since the days of Isaac Newton. But there’s a very important distinction between the WIDU you know and the WIDU you don’t know. Knowing what you don’t know is how science gets started. When you don’t know what you don’t know—WIDU squared, you might say—the deep rabbit-holes open.

So googling your problem is less likely to produce an answer than to confirm, yes, it’s a real problem that everybody seems to have.

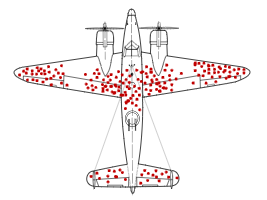

The relevance of this picture is explained in the Wikipedia entry it’s taken from.

What do we do about this? The advice in “Data Sheet: Help!” remains true: understand your problem as thoroughly as you can and share it with as much relevant detail as you can muster. This is the best way to engage the interest of other forum members.

But there’s another approach you can take, which I find I’ve been using more and more this year. It’s something I touched on in the opening paragraph. Companies may now be using AI as their Tier 1 defence. But we can use it, too. Directly and effectively.

At the time of writing, ChatGPT, Claude, Groq and Fiona are all AI engines that can be accessed without a subscription, as long as you stay within the limit each imposes on free exchanges. If you reach your quota with one, it should be easy enough to switch to another.

They’re not all equally reliable, and it’s best to treat their responses with caution. The primary goal of all AI engines (in 2024) is to be plausible; they have no way of being truthful. If a plausible response happens to be accurate and useful, that’s a win. And wins, I’ve found, are not uncommon.

The big win, whatever the outcome of a particular line of enquiry, is that you get a free, very valuable course in rubber ducking.

In case you haven’t followed that link to Wikipedia—but you should—rubber ducking (or more fully, rubber duck debugging) is the process of explaining a problem in natural language to the inert toy of your choice. You do this in sufficient detail for the toy to offer you a solution.

If the toy remains unresponsive you will probably need to dig deeper into the problem. Rinse and repeat.

As you will have guessed, this has never been known to work exactly as described. What happens instead is that the light you throw on the problem to clarify it for your unresponsive toy also illuminates it for you. Perseverance will deliver your eureka!

That was how we were still doing it in the early 21st century. But today’s rubber duck need not be unresponsive. Try the same thing on ChatGPT, Claude, Groq or Fiona. The rubber duck talks back to you.

That’s not entirely the advantage you might think. AI is unlikely to insult you by suggesting you’re posing a foggy question; it prefers to apologise for misunderstanding you. You’ll need to learn to read these apologies as nudges towards clarification. The fundamental principle here is that, exactly as with the bath toy, the ultimate illumination will not come from “out there”. It will be in your own head.

The foregoing was read by Google’s NotebookLM, which added the following paragraph. It points out that, unlike conventional rubber ducking, the conversation with AI can become a two-way street:

Mistaken replies from AI, while sometimes frustrating, can actually be valuable tools for refining the conversation towards more useful results. By identifying and correcting inaccuracies or misunderstandings in the AI’s responses, users can guide the AI towards a more accurate and helpful understanding of their needs. This process of clarification, much like the “rubber ducking” method described in the source, helps illuminate the problem not just for the AI, but for the user as well. Each correction acts as a nudge, pushing the AI towards a more comprehensive and insightful response.

Steering AI Conversations

Chris Bidmead 19-Oct-24